Learn deeply, but baby, don’t fear the Skynet

Who’s afraid of AlphaGo? Everyone who’s anyone, you might think. Elon Musk, Bill Gates, and Stephen Hawking have all expressed concern about the “existential threat” of AI, just as “deep learning” neural networks are revolutionizing the AI field. Should we be scared for our jobs? Or even our species? Fear not, I have answers! They are, respectively, “maybe,” and “don’t be ridiculous.”

It is right to describe recent AI developments as a “breakthrough,” as Frank Chen of Andreessen Horowitz does in this excellent presentation, which summarizes both the history and the state of the art. Chen ends with a bold call to action:

“All the serious applications from here on out need to have deep learning and AI inside … it’s just going to be a fundamental technique that we expect to see in all serious applications moving forward … as fundamental as mobile and cloud were in the last 5-10 years.”

And he’s right! But “deep learning” is not even remotely in the same galaxy as the “AI” that Musk, Hawking, and Gates are worried about. It may be, and indeed probably is, a step along that very long road. But what’s interesting and powerful about deep learning is not that it makes machines “smart” in the way that word is used of people or animals.

What’s interesting about deep learning is that there’s nothing magical or genie-like about it (as Geordie Wood points out in Wired, “it’s really just simple math executed on an enormous scale”), and yet its “programs” are ultimately matrices of values that have been trained, rather than lines of code that are written (although many lines of traditional code go into the training, of course).
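To make the “trained, not written” distinction concrete, here is a toy sketch in plain Python. It is not a deep network, just a single artificial neuron (a perceptron) learning logical AND, and every name in it is my own illustration rather than anything from the pieces linked here. The point is only that the hand-written version keeps its behavior in a line of code, while the trained version keeps it in a few numbers that a loop discovered from examples.

```python
# Hand-written rule: the behavior lives in a line of code.
def and_written(a, b):
    return 1 if a == 1 and b == 1 else 0


# Trained rule: the behavior lives in numbers (weights and a bias)
# that a training loop adjusts until the examples come out right.
def train_perceptron(examples, epochs=20):
    weights = [0, 0]
    bias = 0
    for _ in range(epochs):
        for (a, b), target in examples:
            predicted = 1 if weights[0] * a + weights[1] * b + bias > 0 else 0
            error = target - predicted  # -1, 0, or +1
            # Nudge each value in the direction that reduces the error.
            weights[0] += error * a
            weights[1] += error * b
            bias += error
    return weights, bias


def and_trained(a, b, weights, bias):
    return 1 if weights[0] * a + weights[1] * b + bias > 0 else 0


# The "program" is learned from these four labeled examples.
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(EXAMPLES)

if __name__ == "__main__":
    print("learned values:", weights, bias)
    for (a, b), _ in EXAMPLES:
        print(a, b, and_written(a, b), and_trained(a, b, weights, bias))
```

Nobody typed the AND rule into the second version; it fell out of the numbers. A real deep-learning system does the same thing with millions of values instead of three.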

What’s powerful about it, what’s so exciting, is that it excels at whole fields of problems that are extremely difficult to solve using traditional software techniques: categorization, pattern recognition, pattern generation, etcetera.

It’s not often that whole new kinds of problems suddenly become amenable to better solutions. The last time it happened was when the smartphone ate the world. So deep learning really is an awesome and exciting new development. (As are other forms of AI research, albeit to a lesser extent. The loose taxonomy is that “deep learning” is part of “machine learning” which is part of “AI.”)

Let me offer some primers, while I’m here:

- The free online book Neural Networks and Deep Learning.

- The playground for Google’s open-source TensorFlow machine-learning library, which you can see in action in Chen’s presentation.

- A writeup of license plate recognition with TensorFlow by Matthew Earl.

- A Scientific American visualization of neural networks.

- Everyone who’s anyone is making machine learning available as an API these days, including Google, IBM, Amazon, Microsoft, etc., and most recently, Apple.

But just as deep learning is a good tool for many problems for which traditional programming is bad, it is also a bad (and extremely unwieldy) tool for many problems which traditional programming can solve. I give you, as an example, this hilarious piece from Joel Grus: “FizzBuzz In TensorFlow,” in which he mockingly tries to use TensorFlow to solve the famously trivial “FizzBuzz” interview problem. Spoiler alert: it does not go well.

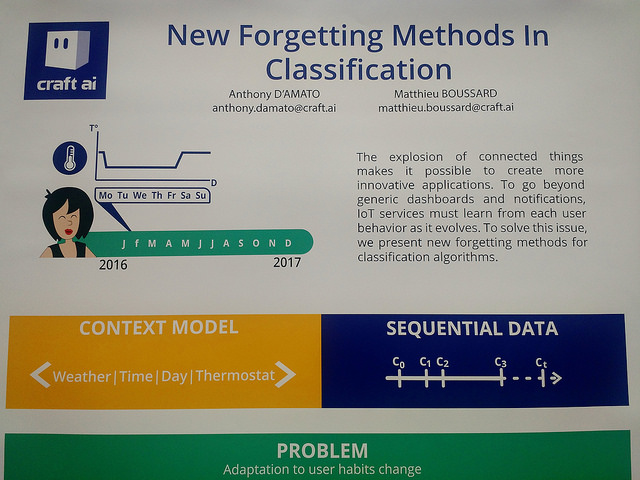
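For contrast, here is roughly what the traditional answer to FizzBuzz looks like. This is a minimal Python sketch of the usual interview solution (the function name is mine, and it is not taken from Grus’s piece): print the numbers 1 to 100, replacing multiples of 3 with “Fizz,” multiples of 5 with “Buzz,” and multiples of both with “FizzBuzz.”

```python
def fizzbuzz(n):
    # Check the combined case first, since multiples of 15
    # are also multiples of 3 and of 5.
    if n % 15 == 0:
        return "FizzBuzz"
    if n % 5 == 0:
        return "Buzz"
    if n % 3 == 0:
        return "Fizz"
    return str(n)


if __name__ == "__main__":
    for i in range(1, 101):
        print(fizzbuzz(i))
```

A dozen lines, no training data, and the rules are right there to read. When the rules are already known exactly, writing them down is the better tool.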

Similarly, neural networks are very far from infallible. My favorite example is “How to trick a neural network into thinking a panda is a vulture” by Julia Evans. And with machine learning come new concerns, such as all the complex tooling around training and using it, per this excellent Stephen Merity analysis of how “we’re still far away from a world where machine learning models can live without their human tutors,” and even the potential need for machine forgetting.

So: AI (the research field) has benefited from a huge breakthrough, which is awesome and exciting and opens up whole new realms of newly accessible solutions that incumbents and startups alike can explore! This also means that jobs which consist largely of pattern recognition and responding to those patterns in fairly simple and predictable ways — like, say, driving — may be obsoleted with remarkable speed.

But AI (as in the research field) is still nowhere near AI (as in artificial intelligence remotely comparable to ours) and this very cool breakthrough hasn’t really changed that. Worry not about Skynet.

But, similarly, let us treat with the appropriate skepticism the clamor for deep-learning solutions to all human problems. Deep learning appears to be the new blockchain, in that people who don’t understand it suddenly want to solve all problems with it. I give you, for example, this call from the White House for “technologists and innovators to help reform the criminal justice system with artificial intelligence algorithms and analytics.”

There is, already, “software used across the country to predict future criminals. And it’s biased against blacks.” Any “improved” machine-learning system is, similarly, extremely likely to inherit the biases of its human tutors — cultural biases which will remain, for the foreseeable future, a problem no neural network can solve. Modern AI is no demon, but it’s no panacea either.
